False Precision in Modelling

If We Knew Everything, We’d Know Everything!

When I was younger I believed in a kind of physical determinism. I thought that if you knew exactly where everything was and exactly how fast it was going, you could perfectly predict everything. I think this belief persists with many folks in data science, especially those who are also software developers like myself. Anyone who hits the inference button on a statistical package is in danger of being seduced by the arbitrary precision in their output and confusing it with probable reality. Knowing the difference between significant and insignificant figures was ingrained in me when I studied mechanical engineering, and the gist of it applies here, too.

Two Worlds

I’m unsure when I first became firmly convinced that this kind of determinism, even if it is perhaps still possible in the abstract, is completely outside the grasp of human capability. The most obvious candidate I can think of is my fluid dynamics class. Even simple bodies of real-life water are remarkably complex in their dynamics. You can see surface waves going in multiple directions and interacting with each other, the wind casting “cat paws” across the surface and disrupting all of that, and beneath it all there is still an unseen set of dynamics below the surface. The only hope we have of understanding these systems is gross and deliberate oversimplification. We build models. These models are effectively toys for understanding. We play with the toys, and hope we learn something about the real world.

In his Statistical Rethinking textbook and YouTube lectures, Richard McElreath illustrates this point by contrasting the two kinds of worlds we deal with in science: the small world of our model, and the large world that we live in.

Financial Modelling

I am currently playing with a model in R using the Stan statistical package that routinely gives me outputs like this: 2.220446e-16. Without knowing anything about my model or data set, you can’t know how many of these digits are significant. I happen to know that none of them are significant: this value is not distinguishable from zero; it is zero. If you are predicting prices for a stock and using them for entry and exit, how precise do you expect the real world to be relative to your model? I cannot give precise advice on this, but your model’s residuals are probably a good place to start looking for an answer. The answer isn’t to arbitrarily decide that your model is probably good to ± 1 dollar unless you’ve actually done math that supports that.
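For what it’s worth, 2.220446e-16 is what machine epsilon for 64-bit floats looks like when printed to seven significant digits, which fits the claim that the value is numerical noise rather than signal. Below is a minimal sketch in Python (not the R and Stan setup described above) of two habits that follow from this: comparing against zero with a tolerance, and rounding a prediction to the scale of the residuals. The tolerance, the residuals, the prediction, and the helper names are all made up for illustration, and rounding to the leading digit of the residual spread is only one rough convention among several.

```python
import math
import statistics
import sys

# The value from the post, as R prints it (7 significant digits).
model_output = 2.220446e-16

# Machine epsilon for 64-bit floats: the gap between 1.0 and the next float.
eps = sys.float_info.epsilon
print(f"{eps:.6e}")  # prints 2.220446e-16


def is_effectively_zero(x, abs_tol=1e-9):
    """Treat x as zero if it is within abs_tol of 0.

    abs_tol is an assumption: pick it from the scale of your own problem.
    """
    return math.isclose(x, 0.0, abs_tol=abs_tol)


def round_to_residual_scale(prediction, residuals):
    """Round a prediction to the decimal place of the leading digit
    of the residuals' standard deviation."""
    spread = statistics.stdev(residuals)
    if spread == 0:
        return prediction
    decimals = -math.floor(math.log10(spread))
    return round(prediction, decimals)


# Hypothetical residuals (in dollars) from some price model.
residuals = [1.8, -2.4, 0.9, -1.1, 2.7, -0.6]

print(is_effectively_zero(model_output))             # True
print(round_to_residual_scale(103.4567, residuals))  # 103.0
```

With residuals that scatter by about two dollars, quoting the prediction as 103.4567 claims four decimal places the model has not earned; 103 is closer to what it can honestly say.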

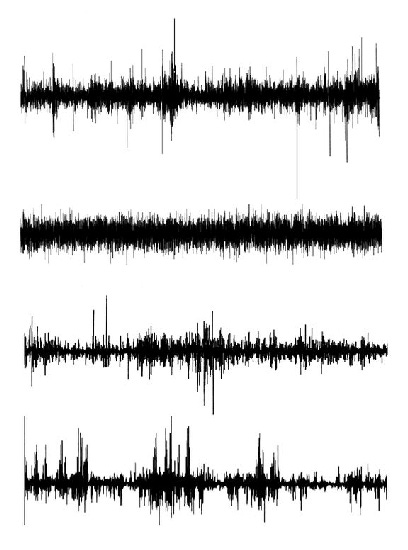

Financial markets are some of the most chaotic systems we can trivially interact with. Almost none of our “small world” models of these markets come even slightly close to matching the Lovecraftian wildness of a random day on Wall Street. Mandelbrot made this point in his book “The (Mis)Behavior of Markets” and put forward his multifractal model of asset returns, which attempts to do better, but it’s difficult to use this model as the basis of anything predictive. Prediction necessarily compresses and simplifies, and his model has fractal randomness at its core. However, his model “looks” much more realistic when you generate charts out of it. Do the same with your own models and I suspect you could train a child to spot the fakes when they are placed alongside real price charts.

My current approach is to embrace how little I can know about the real world. I do not aspire to capture fractal randomness; I aspire to capture effects that are obvious despite that randomness. I simplify and take all output with enough salt to frighten a cardiologist. For example, I’m currently trying out “binning” my return series into quantiles rather than keeping them continuous; do I really care if my model thinks a forward return will be +20% or +30%? Nope. Both are “super good”, so I’ll bin them as such. This should keep the fat tails of returns from teaching my model to be frugal in its predictions. The output of my model will then also be in terms of “super good” or “mediocre”, which is basically all I can expect out of my small-world toy model. If market returns did not have fat tails I probably would not do this, because continuous series can aid convergence.
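The binning idea can be sketched roughly as follows. This is a Python illustration under stated assumptions, not the model described above: the return series is invented, five bins is an arbitrary choice, and every label other than “super good” and “mediocre” is a placeholder of mine.

```python
import bisect
import statistics

# Ordered from worst to best. Only "super good" and "mediocre" come from
# the post; the other labels are hypothetical.
LABELS = ["super bad", "bad", "mediocre", "good", "super good"]


def fit_bin_edges(returns):
    """Return the quantile cut points that split returns into len(LABELS) bins."""
    return statistics.quantiles(returns, n=len(LABELS), method="inclusive")


def bin_return(value, edges):
    """Map a continuous return onto its quantile label."""
    return LABELS[bisect.bisect_right(edges, value)]


# Invented forward returns with a couple of fat-tail outliers.
returns = [-0.25, -0.08, -0.03, -0.01, 0.0, 0.01, 0.02, 0.04, 0.09, 0.30]
edges = fit_bin_edges(returns)

for r in (0.20, 0.30, 0.0, -0.50):
    print(f"{r:+.2f} -> {bin_return(r, edges)}")
```

Both +20% and +30% land in the top bin, so an extreme tail observation carries no more weight than any other “super good” one. The cut points should be fitted on training data only and then reused for new observations; refitting them on the data you are predicting would leak information.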

There is no avoiding it: you will never achieve true precision. Your models will happily give you an answer to 16 decimal places, but you’ll be lucky if you’re right in the real world to a single decimal place. Each model and problem needs a different approach to staying responsible about precision. I hope this post wakes someone up out of that seductive dream that they’re computing real things in their computer models, though I fear I have not been specific enough to be of precise help!